Hanyu Zhou 周寒宇

Research Fellow

School of Computing (SoC)


Biography

I am currently a research fellow at National University of Singapore (NUS), working closely with Prof. Gim Hee Lee.

I was a research intern at Shanghai AI Lab in 2024, working closely with Bin Zhao.

I received my Ph.D. degree from Huazhong University of Science and Technology (HUST) in 2024, advised by Prof. Luxin Yan. Before that, I received my B.Eng. degree from Central South University (CSU) in 2019.

I am now working on event-based and 4D vision for scene perception, understanding, and planning. If you have an excellent project for collaboration, please email me!

Interests

Embodied AI, 3D & 4D Vision, Multimodal LLM, Generative AI, Event Camera, Domain Adaptation.

News

- 2026.01, Our event-based imaging enhancement paper NEC-Diff is accepted to CVPR'26; congratulations to Haoyue.

- 2026.01, Our paper on LLaVA-4D, a 4D large multimodal model, is accepted to ICLR'26.

- 2025.12, I was invited to give a talk at the School of Computer Science, Shanghai Jiao Tong University.

- 2025.12, I was invited to give an online talk for the academic media outlet "具身智能之心".

- 2025.11, I was invited to give a talk at the College of Computer Science, Sichuan University.

- 2025.10, I was invited to give a talk at the Workshop on Imaging, Processing, Perception, and Reasoning for High-Dimensional Visual Data at ACM Multimedia Asia'25.

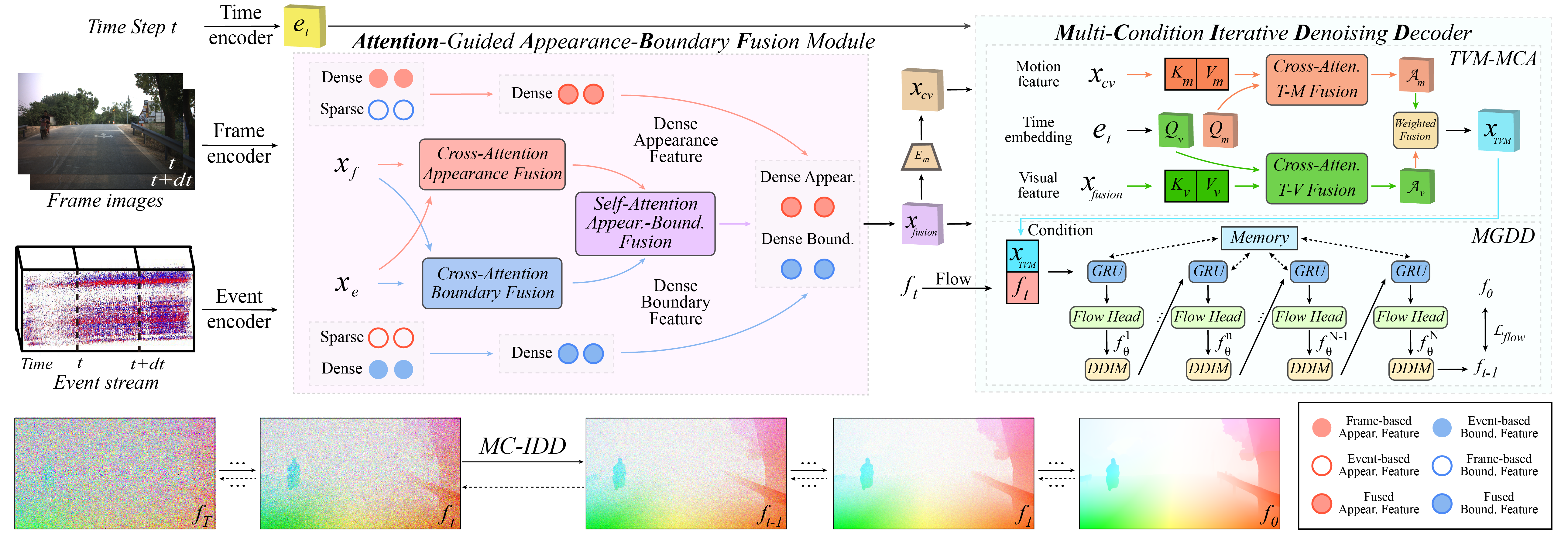

- 2025.09, Our paper on diffusion-based optical flow with frame-event fusion is accepted to NeurIPS'25 (Spotlight); congratulations to Haonan.

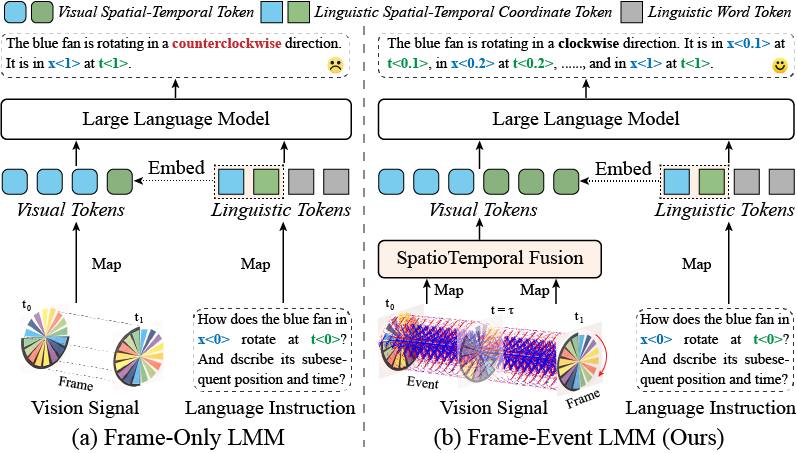

- 2025.06, Our event-based LLM paper is accepted to ICCV'25.

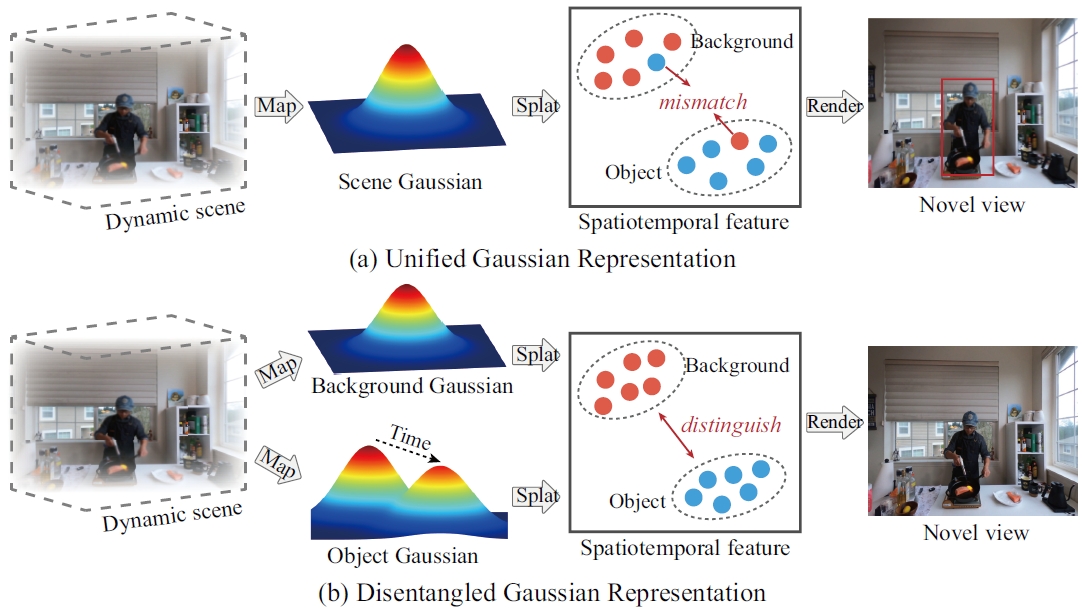

- 2025.06, Our event-based 4D Gaussian splatting paper is accepted to ICCV'25.

- 2025.05, I gave a talk at Xi'an Jiaotong University.

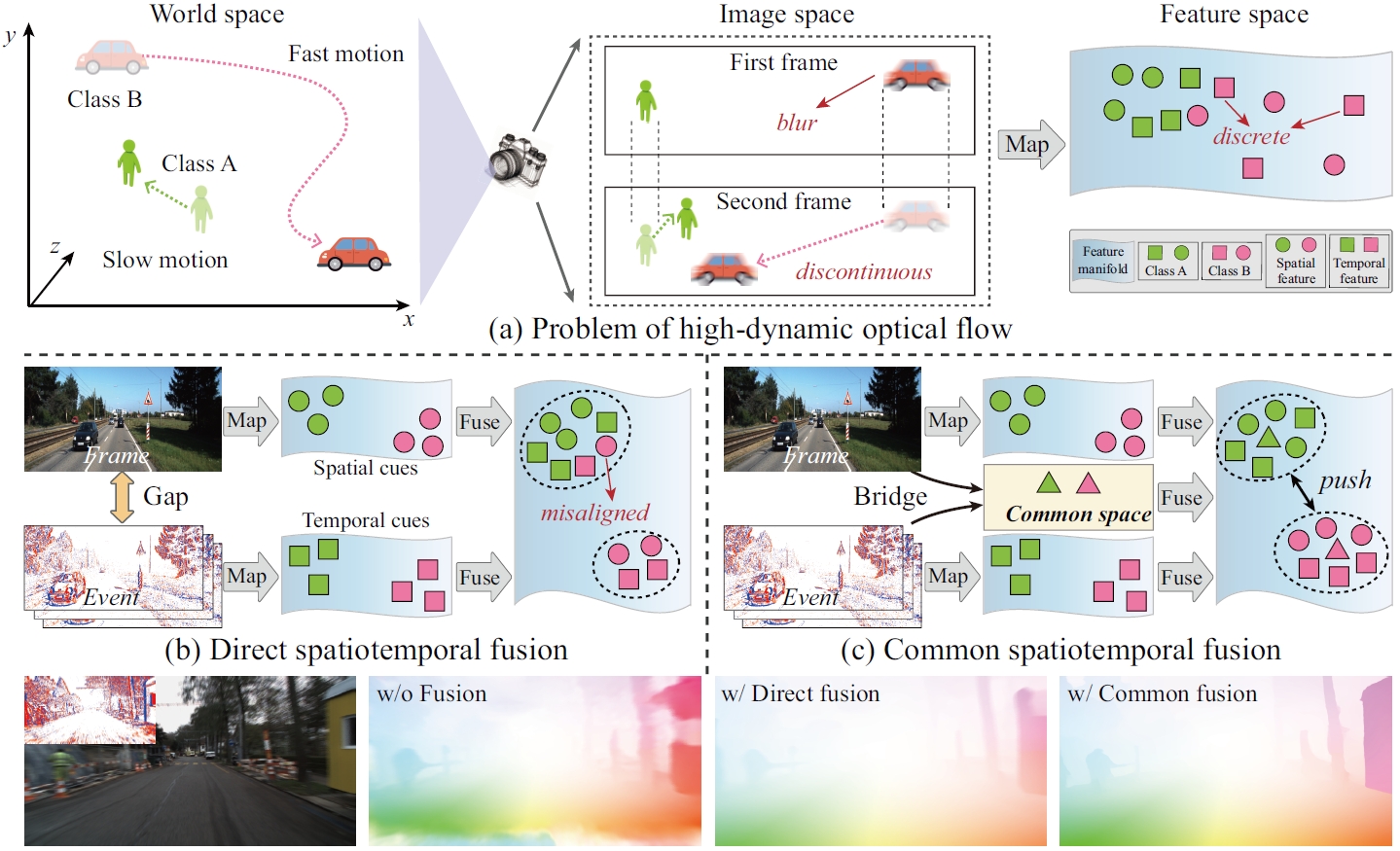

- 2025.02, Our event-based dense and continuous optical flow paper is accepted to CVPR'25.

- 2025.02, Our event-based frame interpolation paper is accepted to CVPR'25; congratulations to Haoyue.

- 2025.02, Our event-based nighttime HDR imaging paper is accepted to TPAMI'25; congratulations to Haoyue.

- 2024.12, I joined the Computer Vision and Robotic Perception (CVRP) Lab as a postdoctoral research fellow.

- 2024.10, I passed my Ph.D. defense and became a Doctor of Engineering.

- 2024.09, Our adverse weather optical flow paper is accepted to TPAMI'24.

- 2024.06, We won 1st place in the 'Text Recognition through Atmospheric Turbulence' track of the 7th UG2+ Challenge at CVPR'24.

- 2024.06, We won 1st place in the 'Coded Target Restoration through Atmospheric Turbulence' track of the 7th UG2+ Challenge at CVPR'24.

- 2024.02, Our VisMoFlow paper on multimodal-fusion-based scene flow is accepted to CVPR'24.

- 2024.02, Our NER-Net paper on nighttime event reconstruction is accepted to CVPR'24.

- 2024.01, Our JSTR paper on event-based moving object detection is accepted to ICRA'24.

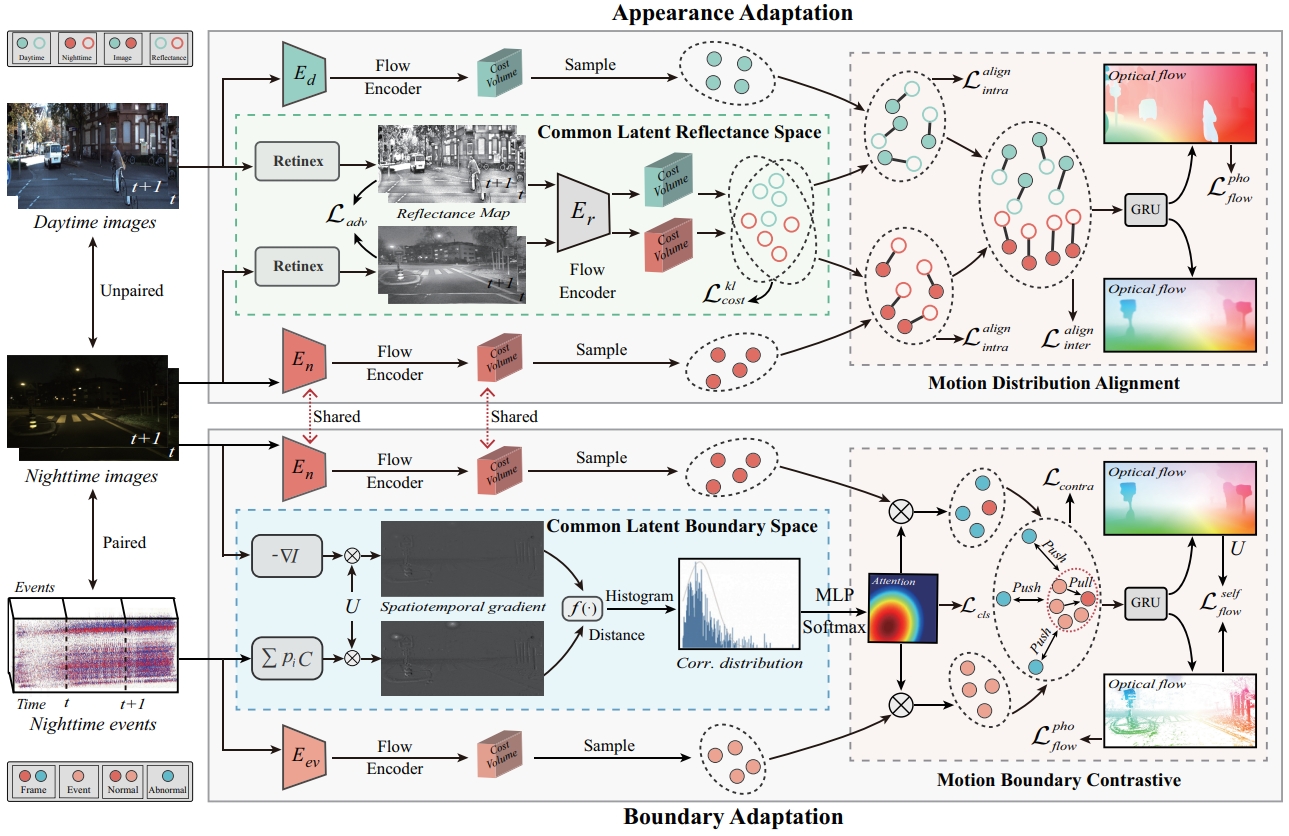

- 2024.01, Our ABDA-Flow paper on optical flow in nighttime scenes is accepted to ICLR'24 (Spotlight).

- 2023.02, Our UCDA-Flow paper on optical flow in foggy scenes is accepted to CVPR'23.

- 2022.11, Our HMBA-FlowNet paper on optical flow under adverse weather is accepted to AAAI'23.

- 2021.01, Our JRGR deraining paper is accepted to CVPR'21.

Research

I like to explore how unmanned systems (e.g., UAVs and robots) can emulate human behavior to relieve humans of manual tasks.

Like humans, unmanned systems perceive the world through vision sensors and then act in response.

I have found that unmanned systems work well in ideal environments but fail under adverse conditions such as adverse weather, low light, and fast motion.

Focusing on these challenging scenes, I have devoted myself to a new research topic: Multimodal-IPUP, i.e., scene imaging, scene perception, scene understanding and scene planning.

1. Regarding unmanned systems, I have built an RGB-Event-LiDAR-IMU multimodal hardware system for 2D/3D/4D adverse scenes and collected a large-scale multimodal dataset covering various times of day and weather conditions. The research outputs are available on arXiv, and more will be released soon.

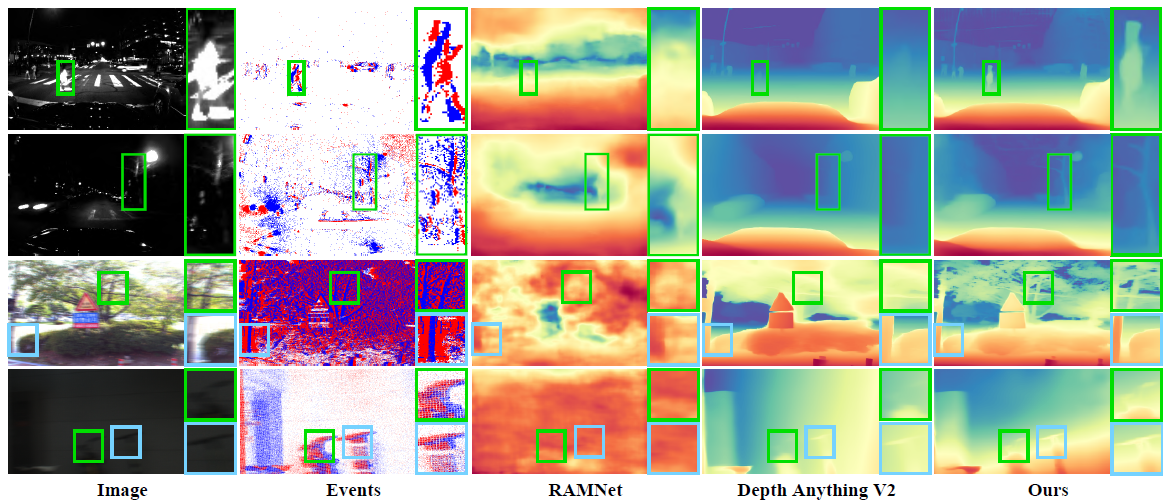

2. Regarding scene imaging, I propose multimodal fusion methods for 2D HDR imaging, 2D frame interpolation, and 3D/4D scene reconstruction. The research outputs have been published in CVPR 2026, ICCV 2025, CVPR 2025, TPAMI 2025, and CVPR 2024.

3. Regarding scene perception, I propose multimodal adaptation frameworks for 2D/3D motion flow and detection. The research outputs have been published in NeurIPS 2025, CVPR 2025, TPAMI 2024, ICLR 2024, ICRA 2024, CVPR 2023, and AAAI 2023.

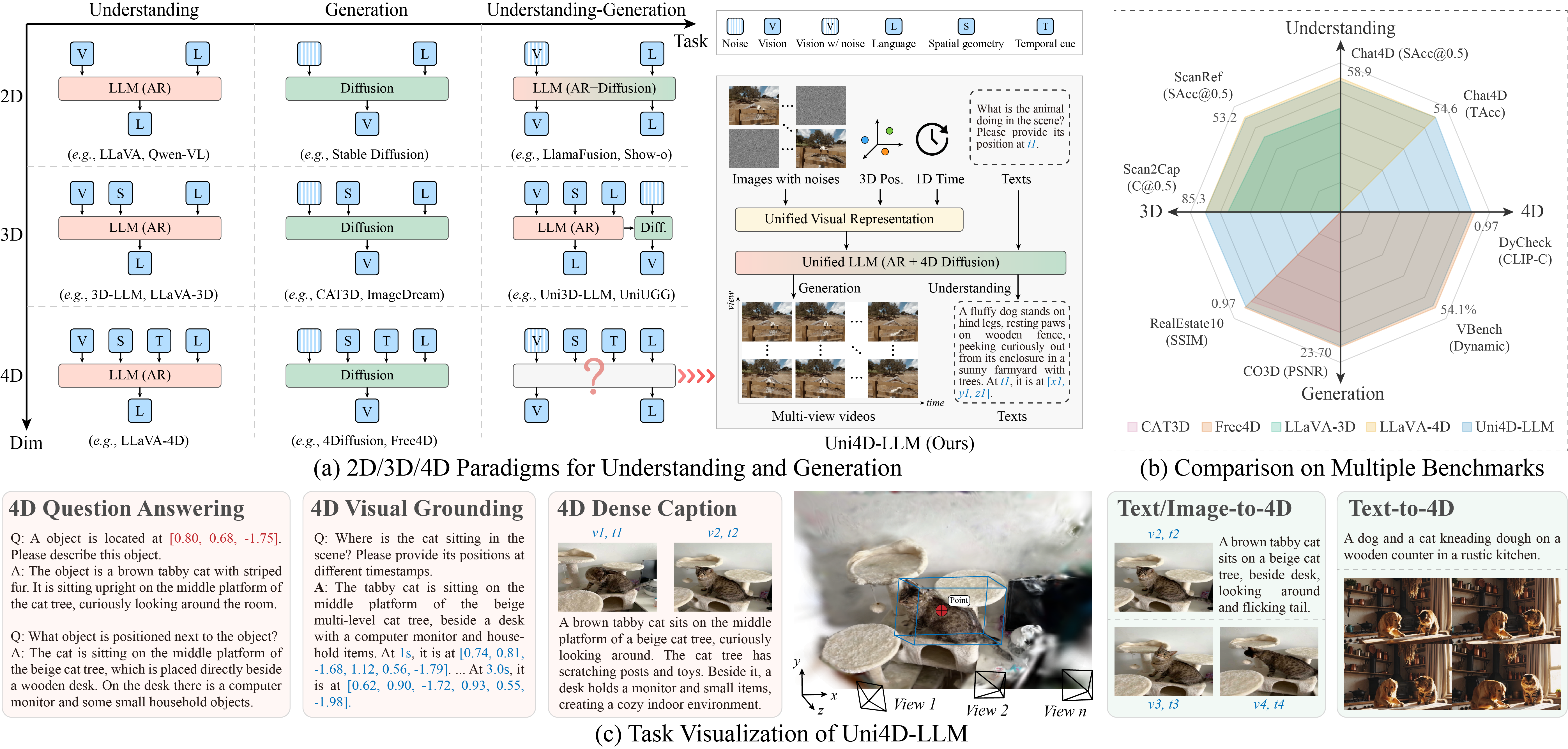

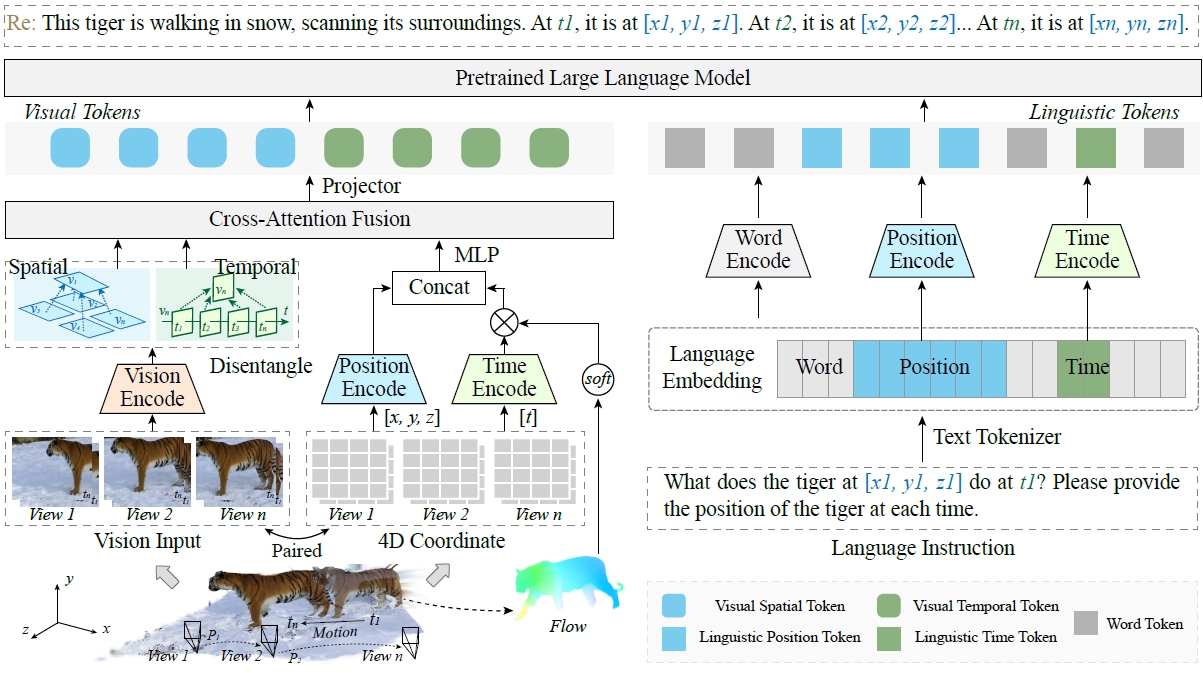

4. Regarding scene understanding and generation, I propose large multimodal vision-language models for 2D/3D/4D reasoning, VQA, dense captioning, grounding, and text-to-4D generation. The research outputs include two arXiv preprints and papers in ICLR 2026 and ICCV 2025, with more coming soon.

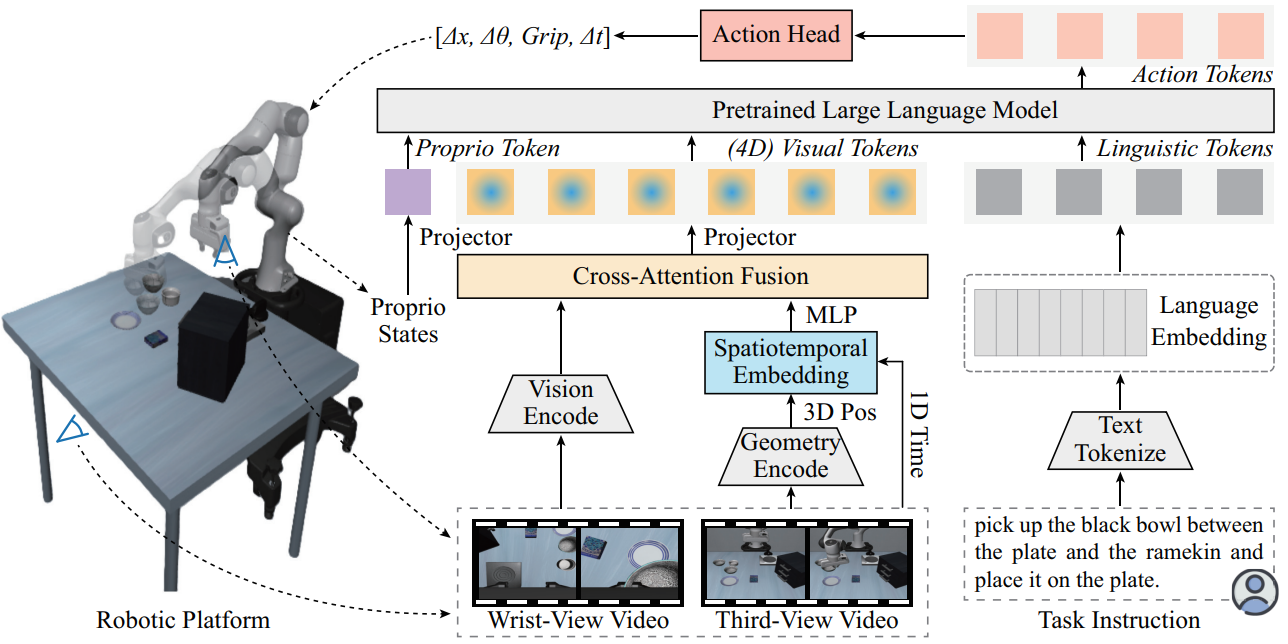

5. Regarding scene planning, I propose large multimodal vision-language-action models for spatiotemporal robotic manipulation. The research outputs are available on arXiv.

Publications (Google Scholar)

CoSEC: A Coaxial Stereo Event Camera Dataset for Autonomous Driving

Shihan Peng#, Hanyu Zhou#, Hao Dong, Zhiwei Shi, Haoyue Liu, Yuxing Duan, Yi Chang, Luxin Yan. arXiv, 2024. [arXiv]

Awards

Academic Services

Journal Reviewers: TPAMI, TRO, IJCV, TIP, TCSVT, TMM, TIM, Sensors, MTAP, Mathematics.

Conference Reviewers: NeurIPS'25, CVPR'23-26, ICLR'25-26, ICML'25, ICCV'25, ACMMM'25, WACV'26, ECCV'24, AAAI'23-26, ICRA'24-25.

Invited Talks

- School of Computer Science, Shanghai Jiao Tong University (Online), Dec 2025

  Title: Foundation Model for the Physical World

- 具身智能之心 (Online), Dec 2025

  Title: 4D Foundation Model for Dynamic Physical World

- ACM Multimedia Asia'25 Workshop, Kuala Lumpur, Malaysia, Dec 2025

  Title: Foundation Model for the Physical World

- College of Computer Science, Sichuan University, Chengdu, China, Nov 2025

  Title: SpatioTemporal Perception and Understanding for the Physical World

- School of Mathematics and Statistics, Xi'an Jiaotong University (Online), May 2025

  Title: Multimodal-Based Imaging, Perception and Understanding in Adverse Scenes

© Hanyu Zhou | Last updated: October 2025